Andreagiovanni Reina, Research Group Leader — GIO Lab — Group Intelligence and self-Organisation

Centre for the Advanced Study of Collective Behaviour, Universität Konstanz & Max Planck Institute of Animal Behavior, Germany

Technology and tools for improving swarm robotics experiments

Robot swarms promise scalability, robustness, adaptivity and low cost. However, they are complex to analyse, model and design because of the large number of nonlinear interactions among the robots. Mathematical and statistical tools to describe robot swarms are still under development, and a theoretical methodology to forecast the swarm dynamics from the individual robot behaviour is still missing. As a consequence, it is common practice to resort to empirical studies to assess the performance of robot swarms. I have developed and deployed open-source tools to simplify and enhance experiments with robot swarms.

Augmented reality for robot swarms

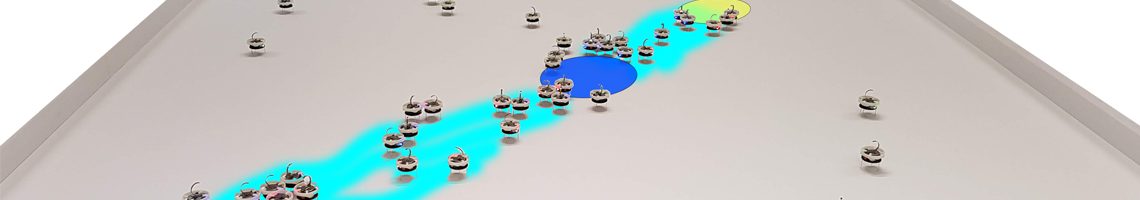

Experiments can be run either in simulation or with physical hardware. Simulations are easier to run and less time-consuming, but when experiments are performed only in simulation, there is no guarantee that the estimated performance matches that measured on real hardware. In contrast, experiments with robots demonstrate that the investigated system works on real devices, which include challenging aspects intrinsic to reality and outside the designer's control, such as noise and device failures. However, experimentation with physical hardware is expensive in both money and time. In addition, hardware modifications are impractical, and often impossible, when time and money are limited. I believe that a viable solution to these issues is to perform hybrid experiments that combine real robots with simulation. I have developed novel technology that endows a robot swarm with virtual sensors and actuators, immersing the robots in an augmented reality environment.

ARK: Augmented Reality for Kilobots

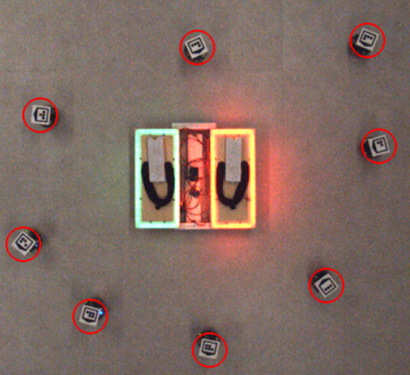

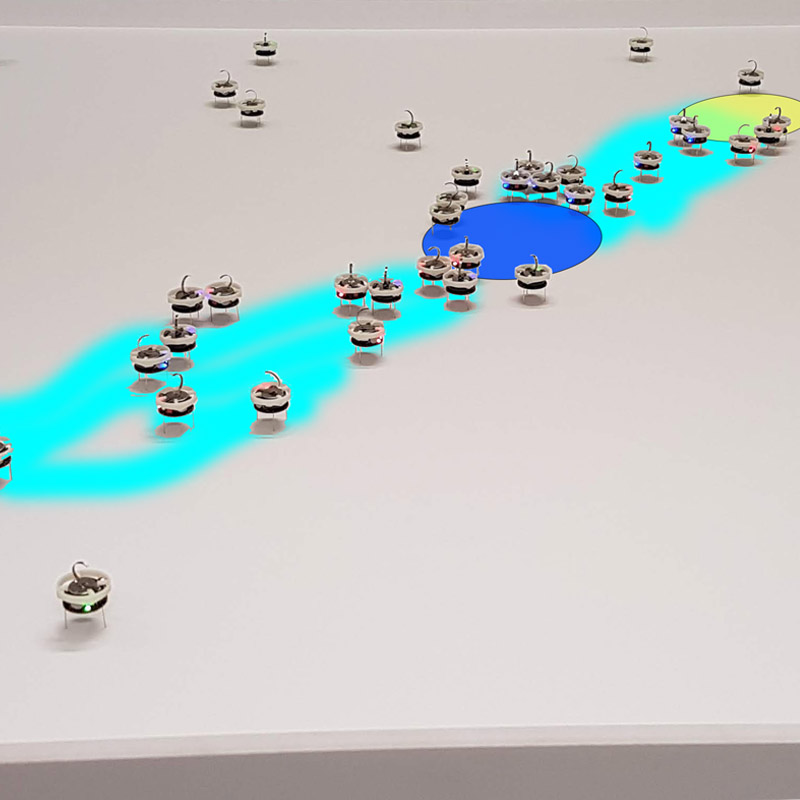

The video below showcases the functionalities of ARK, Augmented Reality for Kilobots, through three demos. In Demo A, ARK automatically assigns unique IDs to a swarm of 100 Kilobots. Demo B shows how ARK can automatically position 50 Kilobots, one of the typical preliminary operations in swarm robotics experiments. Done manually, these operations are tedious and time-consuming; ARK saves researchers' time and makes operating large swarms considerably easier. Additionally, automating the operation gives more accurate control over the robots' start positions and removes undesired biases in comparative experiments.

Demo C shows a simple foraging scenario in which 50 Kilobots collect material from a source location and deposit it at a destination. The robots are programmed to pick up one virtual flower inside the source area (green flower field), carry it to the destination (yellow nest), and deposit the flower there. When performing an action in the virtual environment, a robot signals it by lighting its LED in blue. Each time a robot picks up a virtual flower, the source shrinks for the rest of the robots (its diameter decreases by 1 cm). Similarly, each time a robot deposits a flower at the destination, the destination's diameter increases by 1 cm. This demo shows that robots can perceive (and navigate) a virtual gradient, can modify the virtual environment by moving material from one location to another, and can autonomously decide when to change the virtual environment that they sense (either the source or the destination).

More information is available at: http://diode.group.shef.ac.uk/kilobots/index.php/ARK
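The environment update in Demo C can be illustrated with a small Python sketch that models the source and the nest as circular virtual areas whose diameters shrink or grow by 1 cm per pick-up or deposit. This is a minimal sketch, not ARK's implementation: the class and function names (`VirtualArea`, `VirtualForager`, `step`), the circular geometry and the assumption that the overhead controller keeps each robot's "carrying" state and tracked position are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class VirtualArea:
    """Hypothetical circular virtual area (flower field or nest); units are cm."""
    x: float
    y: float
    diameter: float

    def contains(self, px: float, py: float) -> bool:
        # A robot senses the area only if its tracked position lies inside the circle.
        return math.hypot(px - self.x, py - self.y) <= self.diameter / 2.0


@dataclass
class VirtualForager:
    """Hypothetical per-robot state assumed to be kept by the overhead controller."""
    carrying: bool = False
    led: str = "off"


AREA_CHANGE_CM = 1.0  # diameter change per pick-up or deposit, as in Demo C


def step(robot: VirtualForager, px: float, py: float,
         source: VirtualArea, nest: VirtualArea) -> str:
    """Update one robot at tracked position (px, py); return the action taken."""
    robot.led = "off"
    if not robot.carrying and source.diameter > 0 and source.contains(px, py):
        robot.carrying = True
        # The source shrinks for all other robots, never below zero.
        source.diameter = max(0.0, source.diameter - AREA_CHANGE_CM)
        robot.led = "blue"  # signal an action in the virtual environment
        return "pick_up"
    if robot.carrying and nest.contains(px, py):
        robot.carrying = False
        nest.diameter += AREA_CHANGE_CM  # the nest grows with each deposit
        robot.led = "blue"
        return "deposit"
    return "none"
```

For example, with `source = VirtualArea(0.0, 0.0, 20.0)` and `nest = VirtualArea(100.0, 0.0, 10.0)`, a robot at (2, 0) picks up a flower and the source diameter drops to 19 cm; the same robot later at (100, 3) deposits it and the nest diameter grows to 11 cm. Keeping the area state centrally means every robot immediately senses the updated sizes.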

- A. Reina, A.J. Cope, E. Nikolaidis, J.A.R. Marshall, C. Sabo. ARK: Augmented Reality for Kilobots. IEEE Robotics and Automation Letters, 2(3): 1755-1761, 2017.

- M.S. Talamali, T. Bose, M. Haire, X. Xu, J.A.R. Marshall, A. Reina. Sophisticated Collective Foraging with Minimalist Agents: A Swarm Robotics Test. Swarm Intelligence, 14(1): in press, 2020.

- A. Font Llenas, M.S. Talamali, X. Xu, J.A.R. Marshall, A. Reina. Quality-sensitive foraging by a robot swarm through virtual pheromone trails. In Proceedings of the 11th International Conference on Swarm Intelligence (ANTS), LNCS 11172: 135-149. Springer, Cham, 2018. (Best Paper Award)

- E. Nikolaidis, C. Sabo, J.A.R. Marshall, A. Reina. Characterisation and upgrade of the communication between overhead controllers and Kilobots. Figshare, The University of Sheffield, May 2017.

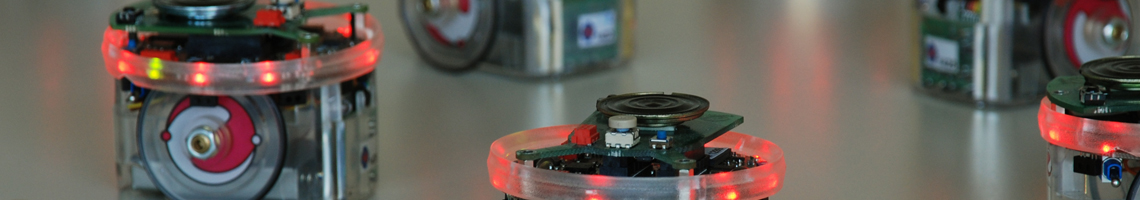

Augmented Reality for E-pucks

The video below illustrates the proposed virtual sensing technology through an experiment involving 15 e-pucks. In this experiment, the robots are equipped with a virtual pollutant sensor. The pollutant is simulated in the ARGoS simulator, and the sensor returns a binary value indicating whether pollutant is present at the robot's location. In our experiment, we assume that the pollutant is present within a diffusion cone, shown in the video as a green overlay. The robots move randomly within a hexagonal arena; when a robot perceives the pollutant (i.e., it lies within the diffusion cone), it stops and lights up its red LEDs with probability P = 0.3 per time step.

- A. Reina, M. Salvaro, G. Francesca, L. Garattoni, C. Pinciroli, M. Dorigo, M. Birattari. Augmented reality for robots: virtual sensing technology applied to a swarm of e-pucks. In Proceedings of the 2015 NASA/ESA Conference on Adaptive Hardware and Systems (AHS). IEEE Press, ID:sB_p3, 6 pages, 2015.

- M. Salvaro. Virtual sensing technology applied to a swarm of autonomous robots. Design and implementation of the communication infrastructure and realization of two virtual sensors. MSc Thesis in Computer Science Engineering, Università di Bologna, Italy, 2015. (Supervisors: M. Milano, M. Birattari, A. Reina and G. Francesca)
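The binary pollutant sensor and the stopping rule of the e-puck experiment can be sketched in Python as follows. The cone parameters (apex, axis angle, half-angle, maximum range) and the names `in_diffusion_cone` and `Epuck` are hypothetical, since in the experiment the pollutant is computed by ARGoS; the sketch also assumes that a robot, once stopped, stays stopped with its red LEDs on.

```python
import math
import random


def in_diffusion_cone(px, py, apex, axis_angle, half_angle, max_range):
    """Hypothetical binary virtual sensor: True if (px, py) lies inside the cone."""
    dx, dy = px - apex[0], py - apex[1]
    dist = math.hypot(dx, dy)
    if dist == 0.0:
        return True  # the apex itself is treated as polluted
    if dist > max_range:
        return False
    # Angle between the cone axis and the apex-to-robot vector, wrapped to [-pi, pi).
    diff = (math.atan2(dy, dx) - axis_angle + math.pi) % (2 * math.pi) - math.pi
    return abs(diff) <= half_angle


STOP_PROBABILITY = 0.3  # per-time-step probability P from the experiment


class Epuck:
    """Minimal robot state for the stopping rule (random walk not modelled)."""

    def __init__(self):
        self.stopped = False
        self.red_leds = False

    def control_step(self, senses_pollutant, rng):
        # Assumption: stopping is permanent once it happens.
        if not self.stopped and senses_pollutant and rng.random() < STOP_PROBABILITY:
            self.stopped = True
            self.red_leds = True
        return self.stopped
```

Passing an explicit `random.Random` instance keeps runs reproducible. With P = 0.3, a robot that stays inside the cone remains moving for k steps with probability 0.7^k, so most robots in the polluted area stop within a few time steps.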

Automatic robot placement

Using the virtual sensing technology, we developed a tool for automatic robot placement. This tool eases experiment setup by automatically navigating robots through the environment to their user-defined final destinations. This functionality is particularly useful for extensive real-robot experiments that require a controlled initial formation of robots. Another application of this tool is generating coordinated robot motion for artistic purposes.
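Automatic placement can be pictured as a go-to-goal loop driven by an overhead tracker: at each step the robot's tracked pose is compared with its target, and a discrete command (turn left, turn right, move forward or stop) is issued, similar to the limited motion repertoire of a Kilobot. The Python sketch below simulates this for a single robot with an idealised, noise-free motion model; the command set, step sizes and tolerances are assumptions for illustration and are not taken from the placement code linked below.

```python
import math

TURN_STEP = 0.1   # rad turned per command (assumed)
MOVE_STEP = 0.5   # cm travelled per forward command (assumed)
ANGLE_TOL = 0.15  # rad; must exceed TURN_STEP / 2 to avoid oscillating
GOAL_TOL = 1.0    # cm; the robot stops once this close to its target


def wrap(angle):
    """Wrap an angle to [-pi, pi)."""
    return (angle + math.pi) % (2 * math.pi) - math.pi


def command(pose, goal):
    """Choose a discrete motion command from the tracked pose (x, y, theta)."""
    x, y, theta = pose
    dx, dy = goal[0] - x, goal[1] - y
    if math.hypot(dx, dy) < GOAL_TOL:
        return "stop"
    error = wrap(math.atan2(dy, dx) - theta)
    if error > ANGLE_TOL:
        return "left"
    if error < -ANGLE_TOL:
        return "right"
    return "forward"


def apply(pose, cmd):
    """Idealised motion model used only for this simulation."""
    x, y, theta = pose
    if cmd == "left":
        return (x, y, wrap(theta + TURN_STEP))
    if cmd == "right":
        return (x, y, wrap(theta - TURN_STEP))
    if cmd == "forward":
        return (x + MOVE_STEP * math.cos(theta), y + MOVE_STEP * math.sin(theta), theta)
    return pose


def place(pose, goal, max_steps=10_000):
    """Drive a simulated robot to its goal; return the final pose and step count."""
    for steps in range(max_steps):
        cmd = command(pose, goal)
        if cmd == "stop":
            return pose, steps
        pose = apply(pose, cmd)
    return pose, max_steps
```

For instance, `place((0.0, 0.0, 0.0), (30.0, 40.0))` returns a pose within `GOAL_TOL` of the target. With real robots, the pose would come from the tracker at every step instead of from `apply`, and one such loop could run for each robot in the swarm.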

- Automatic placement code for ARK (Kilobots): https://diode.group.shef.ac.uk/kilobots/index.php/Experiments_Source_Code

- B. Mayeur. A tool for automatic robot placement in swarm robotics experiments. MSc Thesis in Information Technology, Université Libre de Bruxelles, Brussels, Belgium, 2014 (in French). (Supervisors: M. Birattari, G. Francesca and A. Reina)

Physics-based simulator for the Kilobot robots

Kilobot simulator: https://github.com/ilpincy/argos3-kilobot

The Kilobot is a popular platform for swarm robotics research due to its low cost and ease of manufacturing. Despite this, bootstrapping the design of new behaviours, and developing and debugging them, still takes considerable effort and time. To make this process less burdensome, high-performance and flexible simulation tools are important. I have co-developed a plugin for the ARGoS simulator designed to simplify and accelerate experimentation with Kilobots. First, the plugin supports cross-compilation, so the same controller code runs in simulation and on the real robots, removing the need to translate algorithms across different languages. Second, it is highly configurable to match the real robot behaviour. Third, it is fast: it can simulate several hundred Kilobots faster than real time.

This video shows an experiment in which 50 Kilobots diffuse and pick up food. The experiment is performed with the ARGoS simulator (left) and with real robots (right). The simulation closely resembles its real counterpart.

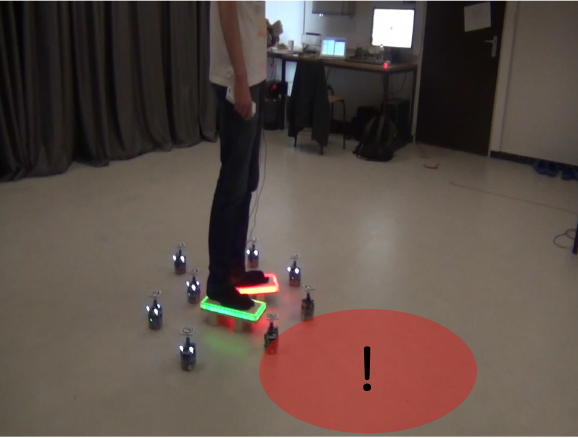

Wearable device for human-robot interaction

We designed and implemented a wearable device that allows an e-puck robot to localise a human. The device is a pair of e-Geta, modelled on the traditional Japanese geta footwear. Each e-Geta has an LED ring (red for the left foot and green for the right foot) that glows when the wearer puts weight on the foot, activating the switch under the e-Geta's heel. The LED ring sits at a height similar to that of the e-puck's LED ring, so the same camera calibration parameters can be used to localise both robots and humans. While the foot is moving, the e-Geta switches off its LEDs to avoid unreliable readings caused by vertical shifts. We tested this tool in a robot swarm-human interaction case study whose novel idea was to let the robot swarm control the human. The underlying idea is that robots can perceive environmental features that a human cannot. When such features are hazardous, the swarm escorts the human and prevents them from stepping into dangerous areas. In our work, the swarm encircles the human and signals the presence of nearby forbidden areas both through its LEDs and by physically obstructing the human's movements.

- A. Debruyn. Human-Swarm Interaction: An Escorting Robot Swarm that Diverts a Human away from Dangers one cannot perceive. MSc Thesis in Computer Engineering, Université Libre de Bruxelles, Brussels, Belgium, 2015. (Supervisors: M. Birattari, G. Podevijn and A. Reina)

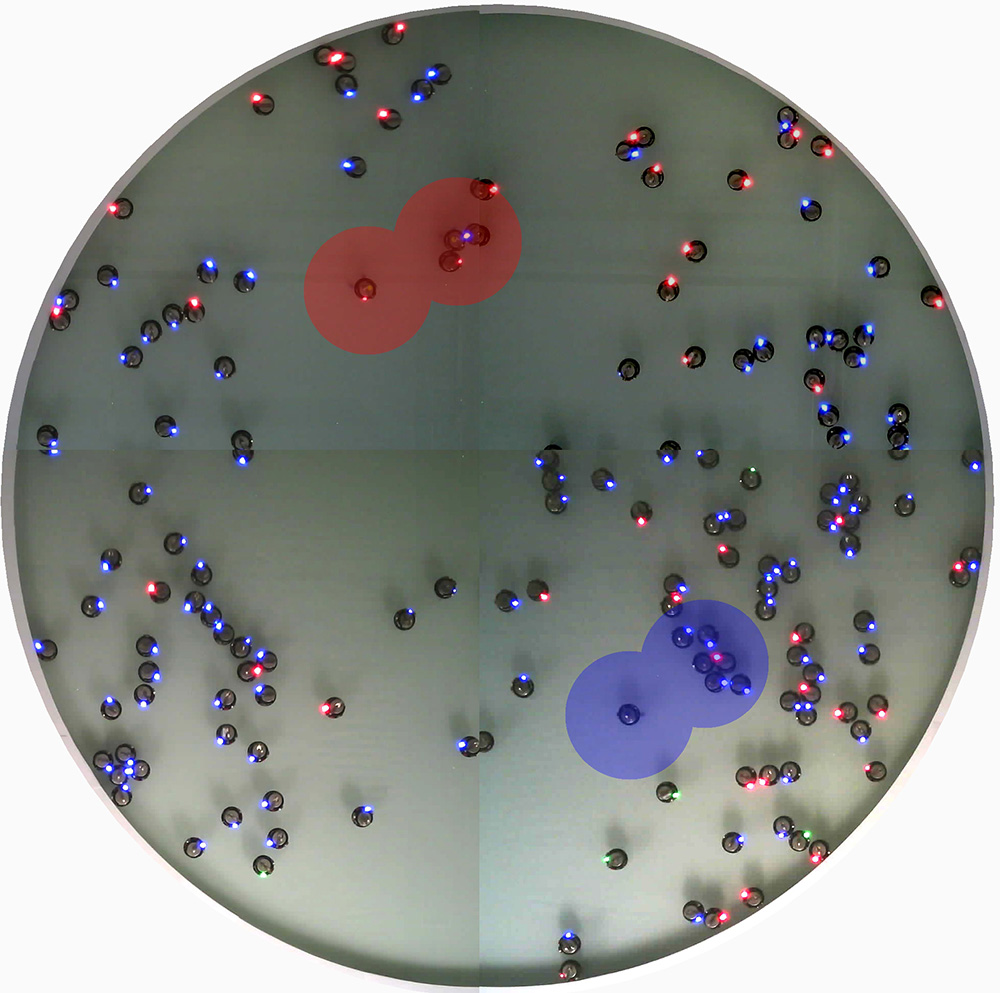

IRIDIA's Arena Tracking System

The main purpose of the tracking system we implemented at the IRIDIA Lab is to provide researchers with a tool to record and control the state of an experiment throughout its execution. Beyond experimental analysis, the tracking system has also been extended to enable augmented reality for robots.

- A. Stranieri, A.E. Turgut, G. Francesca, A. Reina, M. Dorigo, M. Birattari. IRIDIA's Arena Tracking System. Technical Report TR/IRIDIA/2011-020, IRIDIA, Université Libre de Bruxelles, Brussels, Belgium, 2013.

Engineering distributed decision-making

- Value-sensitive decision-making
- Collective foraging with minimalist agents
- Swarm Awareness